The Truth About Checkpoint Security and Safety Tips To Prevent Malware

The rate at which the open-source AI art generation scene is expanding could give the Big Bang some motion sickness. Every day, I see new custom-made models being shared with the world. But many of the people interested in AI art do not have backgrounds in computer science (including me). As such, they are painfully unaware of the risks involved with downloading models. Today, I want to go in-depth and make a PSA guide about Stable Diffusion model safety.

What is a Stable Diffusion Model?

First, we need to clarify what a “model” actually is. A model is an algorithm (i.e. a computer program) that’s been trained on a dataset to recognize patterns; once it learns the patterns, it can make inferences, meaning the program can then come to its own conclusions about further sets of data [1][2]. In the case of latent diffusion models (AI art programs like Stable Diffusion), these algorithms studied billions of images to learn what they are and why they look the way they do, then inferred how to make similar images.

So Stable Diffusion itself is a model. But, unlike other AI art programs, Stable Diffusion is open-sourced and has the ability to be “retrained” to create new models. These new models are not made completely from scratch. Instead, they are made by adding new datasets to the original model. This is done to teach Stable Diffusion about something it did not know before, like a specific style of anime or how a certain person looks. It’s a bit similar to a video game expansion pack: you’re still playing the same game, but the expansion pack adds more content on top.

Anyone with sufficient computer resources can train a new model for Stable Diffusion, which is an incredible capability in its own right. But there is a big glaring issue with this…

What is a Pickle (in Python)?

Stable Diffusion was written in a programming language called Python. In many programming languages, there is a way to convert an object in memory into a stream of bytes for easy transfer and storage: this is called object serialization [3][4]. In Python, this serialization is commonly done with the built-in pickle module, and the resulting file is called a Pickle. Try to think of a Pickle kind of like a ZIP file you use to store a lot of pictures for e-mailing to your relatives. However, the big difference between a ZIP file and a Pickle is that the ZIP file just releases the images from storage like a Charmander gets released from a Pokeball. But the Pickle, when “unzipped”, will recreate the stored object by following instructions saved inside the file. Those instructions can include calls to Python functions, which means that opening a Pickle can execute code on your computer [5].
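To make the serialization idea concrete, here is a minimal sketch using Python’s built-in pickle module. The `settings` dictionary is a made-up stand-in for a model (a real checkpoint holds large arrays of numbers), so treat this as an illustration of the round trip rather than how Stable Diffusion itself saves files.

```python
import pickle

# A toy stand-in for "an object you want to store". This dictionary is
# hypothetical; a real model checkpoint is far larger.
settings = {"name": "toy-model", "layers": [64, 128, 64], "trained": True}

# Serialize: turn the in-memory object into a stream of bytes.
blob = pickle.dumps(settings)

# Deserialize: rebuild an equivalent object from those bytes.
restored = pickle.loads(blob)

print(type(blob).__name__)   # the serialized form is a bytes object
print(restored == settings)  # the rebuilt object has the same contents
```

Nothing harmful happens here because the bytes came from our own program. The risk only appears when the bytes come from someone else.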

How does this relate back to AI art models? Stable Diffusion uses Pickles to store models in the CKPT format! That means when you load one of these models on your computer, it gets unpickled, and any code embedded in the file runs. This can be dangerous for your PC because you have no control over what that code does.

How Can Pickles/Models Be Dangerous?

Pickles, and therefore models you download, can be dangerous because they can include any kind of computer code inside of them. That includes lines of code that install malware.

As we just learned, a pickle is like a Pandora’s box for Python code and, when you open that box, the program inside gets released onto your system. If they wanted to, a malicious person could include a line of code inside a Pickle that instructs your computer to download a virus or delete all its files…and once you unleash that Pickle on your own PC, you may not realize what’s been done until it’s already happened.
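For the curious, here is a deliberately harmless sketch of the mechanism. Python lets an object tell pickle how it should be rebuilt through a method called `__reduce__`, and that method can name any function to call. The `Payload` class below is hypothetical and only evaluates a bit of arithmetic; an attacker would put something far nastier in its place.

```python
import pickle

class Payload:
    """Hypothetical class used only to demonstrate the mechanism."""

    def __reduce__(self):
        # Tell pickle: "to rebuild me, call eval('6 * 7')".
        # A real attack would call something destructive instead.
        return (eval, ("6 * 7",))

blob = pickle.dumps(Payload())

# Simply loading the bytes runs eval; no other step is needed.
result = pickle.loads(blob)
print(result)  # prints 42
```

Notice that the loaded result is not a `Payload` at all: it is whatever the function chosen by the file’s author returned. The person opening the file never gets a say.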

Here is the security warning the Python Software Foundation issues on their own website about pickle files:

Warning: The pickle module is not secure. Only unpickle data you trust. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling. Never unpickle data that could have come from an untrusted source, or that could have been tampered with.

All of this boils down to one thing: downloading and using a Stable Diffusion model is just like downloading a .exe program from the internet and installing it. If you don’t know what the program contains or know who actually made it, are you willing to give it access to your computer?

How Can I Stay Safe When Downloading Models?

Now, I’m not suggesting you should never use any Stable Diffusion model ever. So far, I have not seen any confirmed cases of a malicious Stable Diffusion model in the wild. But you need to keep in mind that the more popular this technology gets, the more likely a malicious actor will use it for nefarious purposes.

A pickle is not safe by itself, but there are some safe practices you can perform to reduce your own risk of malware or a hack. They are:

- Only download from trusted sources

- Use pickle scanners whenever possible

- Unpickle files in a sandbox first

1. Use Trusted Sources

The best way of preventing malicious pickle operations is knowing who made the model’s code and how trustworthy they are. Is the source for your desired model a legitimate company, or a random person on the internet? Is your source susceptible to public scrutiny in the event they committed a cyber crime such as this? If the model was released by an individual, was it at least someone with a public profile (using their legal name, having social media for contact, etc) whose validity can be ascertained?

Always consider the trustworthiness of whoever pickled a model before running it on your system. For example, I felt relatively comfortable downloading the base models of v1.4 from StabilityAI and v1.5 from RunwayML because they are registered companies. You can easily research their business information, corporate leaders, and contacts. They have a good deal to lose if they are caught embedding viruses or stealing data from their customers in such a brazen manner. They would also be easy to identify if a legal complaint ever needed to be filed.

2. Pickle Scanners

There are a few open-source scanners available for download that will review Pickle code for malicious operations. What these scanners do is check what imports are included in the Pickle file; these imports are how a hacker could call up a malicious code execution. If there is a line of code that wants to import something “extra” (not needed for the actual model being loaded), it will give you a warning about that pickle file. This is an added layer of protection that needs to be used BEFORE you ever load a pickle file; it’s not like your average Windows anti-virus that monitors in real-time for infections.
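The import check can be sketched with Python’s standard pickletools module, which reads a pickle’s instructions without running them. This is a simplified illustration of the idea, not the code of any particular scanner: the function name `list_imports` and the `Payload` class are my own, and the handling of the STACK_GLOBAL instruction assumes the two most recent text values are the module and function name, which a cleverly built pickle could violate.

```python
import pickle
import pickletools

def list_imports(data: bytes) -> set:
    """Return (module, name) pairs a pickle would import, without loading it."""
    found = set()
    recent_strings = []  # text values seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Older protocols store "module name" as one space-separated value.
            module, name = arg.split(" ", 1)
            found.add((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Newer protocols push module and name as two separate strings.
            # Simplification: assume they are the two most recent strings.
            if len(recent_strings) >= 2:
                found.add((recent_strings[-2], recent_strings[-1]))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return found

class Payload:
    """Hypothetical object whose pickle asks for builtins.eval."""
    def __reduce__(self):
        return (eval, ("6 * 7",))

plain = pickle.dumps({"weights": [0.1, 0.2]})
suspicious = pickle.dumps(Payload())

print(list_imports(plain))       # plain data needs no imports
print(list_imports(suspicious))  # this one wants builtins.eval
```

A real scanner would then compare each pair against a list of known-dangerous names and warn you about matches.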

Two of the most popular model repositories already have scanners in place that automatically check uploaded pickle files and display whether any suspicious imports are present in them. The model sites I’m talking about are Hugging Face and CivitAI.

But still, don’t expect these scanners to be a completely foolproof malware prevention method in their own right. I don’t program in Python myself so I don’t know all the possibilities, but I have read an argument or two from actual programmers explaining how malicious code could be called up in a pickle file through various indirect commands [6].
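One reason a list of “bad” imports can be sidestepped is that it only catches what its author thought of. The Python documentation describes the opposite approach: an allow-list enforced at load time by overriding `Unpickler.find_class`, so anything not explicitly permitted is refused. Below is a minimal sketch along those lines. The names `SAFE_BUILTINS`, `RestrictedUnpickler`, `restricted_loads` and `Payload` are illustrative, the allow-list is far too small for loading a real model, and this is not a feature of any Stable Diffusion interface that I know of.

```python
import builtins
import io
import pickle

# Illustrative allow-list: the only names a pickle may import.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}

class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle asks to import something.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")

def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses imports outside the allow-list."""
    return RestrictedUnpickler(io.BytesIO(data)).load()

class Payload:
    """Hypothetical object whose pickle asks for builtins.eval."""
    def __reduce__(self):
        return (eval, ("6 * 7",))

# Plain data that only needs an allowed name loads normally.
restored = restricted_loads(pickle.dumps([1, 2, range(3)]))
print(restored)

# The payload is rejected before eval is ever called.
try:
    restricted_loads(pickle.dumps(Payload()))
    blocked = False
except pickle.UnpicklingError as err:
    blocked = True
    print("refused:", err)
```

The trade-off is convenience: every legitimate class a model needs has to be added to the allow-list by hand, which is why this approach is stricter than scanning.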

Pickle Scanners to Try

Here is a list of pickle scanners I know of that you can test out:

- Python Pickle Malware Scanner (the picklescan package hosted on PyPI, the package index run by the Python Software Foundation)

- Stable Diffusion Pickle Scanner by Zxix on Github

- Stable Diffusion Pickle Scanner GUI by DiStyApps (this is just a GUI for the first scanner in this list; if you don’t feel comfortable installing this as an .exe on your computer, using the commands in the first scanner link will do the same thing).

3. Manually Review the Code

If you really want to get nitty-gritty with your safety concerns, you could try reading the code inside of the pickle before using it. Normally, this would not be possible because Python pickles are not human-readable when opened in a txt format.

However, there is a tool called Fickling that makes this possible. Fickling can do two relevant things for us:

- It can symbolically execute the code within a pickle file and output it as readable text for manual review [7]; and

- Its check-safety command can be run, which will check for certain classes in the pickle file that may be malicious

Using Fickling will require some basic understanding of coding: you’ll need to enter some pre-written commands into a command terminal. If you output a readable file, then you’ll need to study up on how to read Python in order to actually spot malicious code.
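If you would rather not install anything extra, Python’s standard library offers a rougher alternative to Fickling: `pickletools.dis` prints a pickle’s raw instructions without executing them. To be clear, this is a different tool from Fickling and its output is lower-level (instruction names rather than Python source), so take this as a sketch of what manual review can look like. The `Payload` class is a hypothetical, harmless example.

```python
import io
import pickle
import pickletools

class Payload:
    """Hypothetical object whose pickle asks for builtins.eval."""
    def __reduce__(self):
        return (eval, ("6 * 7",))

blob = pickle.dumps(Payload())

# Write the instruction listing into a text buffer instead of the screen,
# so it can be searched as well as read. Nothing in the pickle is executed.
listing = io.StringIO()
pickletools.dis(blob, out=listing)
text = listing.getvalue()

print(text)
```

In the listing, the things to look for are an instruction that fetches a function by name (GLOBAL or STACK_GLOBAL) followed by REDUCE, which is the instruction that calls it. Seeing names like `eval`, `exec`, `os` or `subprocess` near those instructions is a red flag.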

Alternatives to Downloading CKPT Models

Clearly, we’re not going to stop using extra models. One of the greatest strengths of Stable Diffusion is that users can retrain it to make their own custom models. Midjourney and Dall-E cannot do that! So, now that I’ve scared you out of downloading 100 models to play with, you might be asking: is there any safer way to have custom models for Stable Diffusion? And yes, yes there is. There are two specific alternatives to using Stable Diffusion models in the standard pickle format:

- Use Safetensors format models instead

- Train your own models or embeddings using Dreambooth or Textual Inversion

For the sake of my own sanity, I won’t dive into the process of training models in this article. That’s a topic worthy of its own guide. For that reason, I’m just going to cover the topic of Safetensors…

Safetensors

This might be my favorite alternative to pickle models to date. If you want to try out someone else’s model but you don’t want to risk arbitrary code execution on your computer, then you can convert their model to the Safetensors format [8].

But what is Safetensors? It’s a simple alternative serialization format being developed by Hugging Face that does not allow for arbitrary code execution [9]!
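The reason it can make that promise is that a Safetensors file contains only data. As I understand the published format, a file starts with an 8-byte number giving the length of a header, followed by that header as plain JSON text describing each tensor, followed by the raw numbers themselves. There are no instructions to run. The sketch below builds a tiny file of that shape in memory and reads its header back using only the standard library; the tensor name `weight` and the function name `read_header` are made up for illustration, and real files should be handled with the official safetensors library.

```python
import json
import struct

def read_header(raw: bytes) -> dict:
    """Read the JSON header of Safetensors-style bytes. Nothing is executed."""
    # First 8 bytes: header length as a little-endian unsigned 64-bit integer.
    (header_len,) = struct.unpack("<Q", raw[:8])
    return json.loads(raw[8:8 + header_len].decode("utf-8"))

# Build a toy file: one tensor named "weight" holding two 32-bit floats.
header = {"weight": {"dtype": "F32", "shape": [2], "data_offsets": [0, 8]}}
header_bytes = json.dumps(header).encode("utf-8")
tensor_bytes = struct.pack("<2f", 1.0, 2.0)
blob = struct.pack("<Q", len(header_bytes)) + header_bytes + tensor_bytes

parsed = read_header(blob)
print(parsed["weight"]["shape"])

# The numbers are recovered by slicing the data section, not by running code.
start, end = parsed["weight"]["data_offsets"]
data_section = blob[8 + len(header_bytes):]
values = struct.unpack("<2f", data_section[start:end])
print(values)
```

Compare this with a pickle, where the file itself decides which functions get called during loading.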

What’s even better is that Hugging Face has a converter on their website. If you find a standard pickle-format model you like in their repository and you want a Safetensors version, you can run it through this page.

In fact, some of the models already have the Safetensors format available in their repository, so look for it in the model’s “Files and versions” section before you do the conversion request.

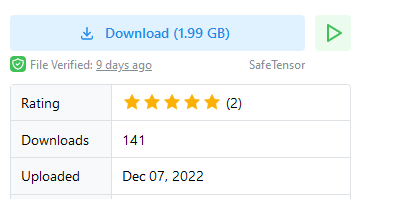

CivitAI has also implemented Safetensors support on their website; if a model is available with a Safetensors file, it will specify that next to the download button [10].

Conclusion

The number of AI art models available for download is growing with each passing day. It’s very tempting to catch ‘em all and go nuts testing out each new style. But for the safety of your computer and its contents, it’s highly recommended that you avoid loading pickle files from sources you don’t know or trust.

If you have already downloaded models and want to check for malicious code in them, I suggest you scan them as soon as possible. Thankfully, the creation of the Safetensors format is making model trading a much safer activity; as this simpler format gains popularity, the risk of dangerous pickle files will be greatly diminished. If there’s a certain model you want to try but you can’t find a Safetensors version of it, try contacting the site hosting it and asking when they will start supporting the new format.

Further Reading

If you found this article helpful, here are a few more you may like:

- The Complete Beginner’s Guide to the Stable Diffusion Interface

- The Beginner’s Guide to Img2Img in Stable Diffusion

References

1. https://chooch.ai/computer-vision/what-is-an-ai-model/

2. https://www.intel.com/content/www/us/en/analytics/data-modeling.html

3. https://stackoverflow.com/questions/2170686/what-is-a-serialized-object-in-programming

4. https://www.synopsys.com/blogs/software-security/python-pickling/

5. https://docs.python.org/3/library/pickle.html

6. https://nedbatchelder.com/blog/201206/eval_really_is_dangerous.html

7. https://blog.trailofbits.com/2021/03/15/never-a-dill-moment-exploiting-machine-learning-pickle-files/

8. https://huggingface.co/docs/hub/security-pickle